The Soul of the Writer

on LLMs, the psychology of writers, and the nature of intelligence

“GPT is not like us — but it is of us. It has read more of humanity's writing than any human, ever, by orders of magnitude. All of its behaviors, good and bad, hold up a mirror to the human soul.”

There has been a lot of talk about how GPT-4 and its ilk represent “a mirror of the human soul” or a kind of distillation of our nature. The assumption here is that mankind’s corpus captures our essence and that large language models (LLMs) are grokking this essence as they “read” the sum total of our writing. There are a multitude of reasons why this is a bad assumption, but here are two that I find interesting.

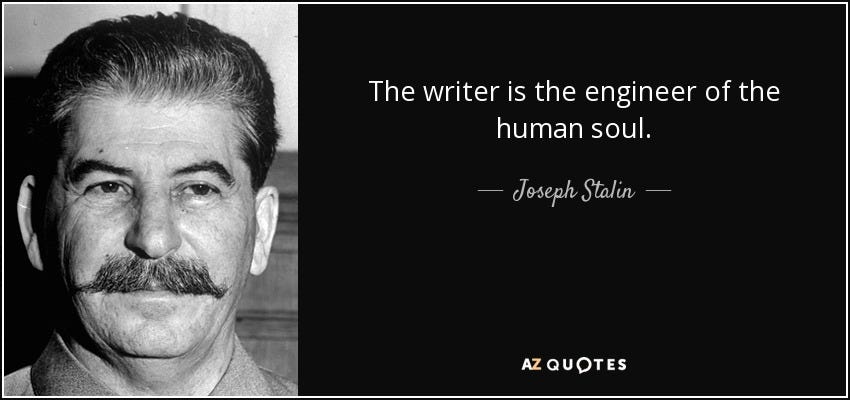

While many of the noblest minds that have ever graced the planet are counted among the ranks of the writers, we shouldn’t imagine that these outliers represent the norm (beware availability bias). Comparing all those who have ever taken up the quill or the pen (the scholars, the journalists, the propagandists, the second-rate novelists) to all of those who haven’t (at least not in any way that made it into the LLM corpus), I think we would find certain types of people to be overrepresented in the former group: the loudmouths, the blowhards, the know-it-alls, the self-aggrandizers, the attention-seekers, the fanatics (but of course none of these labels apply to yours truly). Consider the people who spend the most time bloviating into the digital aether: are these the wisest and most level-headed among us?

If you met someone who embodied the “soul of the writer,” you would probably think they were an asshole (…so don’t be surprised when an LLM acts like one).

Assholes maybe, but at least your average writer is more intelligent than the average person, right? Sure, if you believe that symbolic reasoning is the highest form of human intelligence.

What about the intelligence of the body—the cunning of the muscle, the intuition of the gut, the wisdom of the loins? Conscious cognition is only the tip of the iceberg, a small cap on a much vaster intellect that permeates our entire being.

“Encoded in the large, highly evolved sensory and motor portions of the human brain is a billion years of experience about the nature of the world and how to survive in it. The deliberate process we call reasoning is, I believe, the thinnest veneer of human thought, effective only because it is supported by this much older and much more powerful, though usually unconscious, sensorimotor knowledge. We are all prodigious olympians in perceptual and motor areas, so good that we make the difficult look easy. Abstract thought, though, is a new trick, perhaps less than 100 thousand years old. We have not yet mastered it. It is not all that intrinsically difficult; it just seems so when we do it.”

This argument is known as Moravec’s paradox. Stated more explicitly:

We should expect the difficulty of reverse-engineering any human skill to be roughly proportional to the amount of time that skill has been evolving in animals.

The oldest human skills are largely unconscious and so appear to us to be effortless.

Therefore, we should expect skills that appear effortless to be difficult to reverse-engineer, but skills that require effort may not necessarily be difficult to engineer at all.

Some examples of skills that have been evolving for millions of years: recognizing a face, moving around in space, judging people's motivations, catching a ball, recognizing a voice, setting appropriate goals, paying attention to things that are interesting; anything to do with perception, attention, visualization, motor skills, social skills and so on.

Some examples of skills that have appeared more recently: mathematics, engineering, games, logic and scientific reasoning. These are hard for us because they are not what our bodies and brains were primarily evolved to do. These are skills and techniques that were acquired recently, in historical time, and have had at most a few thousand years to be refined, mostly by cultural evolution.

So here is another reason that we should be worried about artificial intelligence: it represents the concentrated extract of the “dumbest” (i.e. the least evolutionarily-optimized) part of our minds. In creating LLMs, we’ve essentially taken a buggy prototype, scaled it up, and rushed it to market.

Human intelligence is augmented by what Gregg calls our “additional cognitive sprinkles”. These include language, theory of mind, causal inference, our capacity for moral reasoning, episodic foresight (the ability to mentally project ourselves into the future to simulate imagined events) and death wisdom (an awareness of your own mortality). But, he argues, it is the very complexity of our intelligence that may make us less successful in evolutionary terms, destined to vanish from the Earth before other species, such as crocodiles, who are stupider but have been around for millions of years. What is the point of all our intellectual achievements, Gregg asks, if we go extinct after a mere 300,000? If climate change is to be our downfall, then human intelligence may just turn out to be “the stupidest thing that has ever happened”.

— Review: If Nietzsche Were a Narwhal by Justin Gregg

If AI is to be our downfall, then creating it may just turn out to be the stupidest thing we know-it-alls have ever done.

This is one of the most interesting commentaries I've seen on the subject, and I've read many. My biggest concern has been that so many people seem to credit LLM AIs with far more than they are capable of, and to expect far more of them. They revere and fear them as if they were human, and don't seem able to comprehend the differences.

As you've pointed out, the differences are marked and important ones.

I have far less fear of what the AIs will do than of what humans will do, both in general and in relation to the AIs' abilities and potential consequences.

We shall see... As in all of life, we occupy a ringside seat to watch the great unknown of what's to come. This will be an interesting show.

Love the title and concept 👌