Ex Machina and Embodied Cognition

I recently re-watched Ex Machina and remembered how good it was. I highly recommend giving it a watch if you are at all interested in AI, science fiction, or just really good movies.

There is a plot point, really just a few lines, that I find fascinating and kind of profound. Hearing them again, I remembered in a flash how struck I was by these same lines the first time I watched. In the movie’s world, “Blue Book” is basically their version of Google. The CEO is a mad scientist-type guy named Nathan Bateman who uses the data from Blue Book to create an artificial intelligence, which of course he then puts into the body of a hot robot chick named Ava (not making this up). Bateman is never super specific about how he created the AI, but he does suggest the general strategy he used.

“Here's the weird thing about search engines. It was like striking oil in a world that hadn't invented internal combustion. Too much raw material. Nobody knew what to do with it. You see, my competitors, they were fixated on sucking it up and monetizing via shopping and social media. They thought that search engines were a map of what people were thinking. But actually they were a map of how people were thinking. Impulse. Response. Fluid. Imperfect. Patterned. Chaotic.”

Search engines are a map of how we think.

Have you ever typed the beginning of a question into Google and looked at the autofill suggestions? I did a little experiment: I typed in the beginnings of three very fundamental questions and looked at what showed up as autofill recommendations. I think the results tell us a lot about human nature.

What does this tell us about human nature?

“Huh, there is this big blue dome that hangs over the world all the time. I wonder why it is blue.”

We are curious creatures to our core. But also it’s kinda impossible to ignore, because the sky is just, you know, up there, like all the goddamn time. And sometimes good shit falls out of the sky and sometimes bad shit so obviously we are curious about it.

So naturally humans are also very interested in weather, especially less typical and more dangerous weather like snow. Of course we like to search about abnormal weather patterns, especially in Texas of all places.

It’s also especially interesting to us when the snow causes power to go out. It’s all very primal – “Snow bad. Snow make fire go away. Need fire, no freeze.” I had two job interviews today – it was snowing today so obviously we started both interviews with a quick chat about the weather.

And last, but not least, people are asking about their money: their resources, their food, their shelter. Again, very primitive: “Me need food, me need shelter. You no take.”

What does this tell us about human nature?

Again, we are curious and we like money.

Rush Limbaugh represents a few things that grab our interest more than almost anything. He was famous and rich – a very important monkey in the tribe. He’s also recently dead which is why I am saying was and not is. Death is simultaneously terrifying and morbidly fascinating to us. Humans praise the recently deceased, but also we really like talking shit about people, especially after they are dead and can’t respond to us. There is a lot of shit to talk about Rush Limbaugh, too.

"Rush Limbaugh had a radio segment called "AIDS Update" where he'd read out the names of gay people who had died and celebrate with horns and bells. So the whole 'don't speak ill of the dead' thing doesn't apply to this absolute f****** monster. Let him rot."

And last, but not least, we hate when things are hidden from us and we want to know the secret, even if it’s some stupid show where D-list celebrities wear a mask and sing songs.

This is equal parts unsurprising, hilarious, and illuminating.

Illuminating because it is such a raw demonstration of embodied cognition. Human minds, and all minds that we know of, are embedded in a fleshy body that constantly moves and perceives the world, both internally (the feeling we get when our stomach is turning) and externally. Embodied cognition means a lot of things (at least six), but fundamentally it just refers to the fact that many features of our cognition are shaped by the physicality of our bodies and their interactions with the world. When you hear or see running water and it makes it easier for you to pee, that’s embodied cognition. Another simple illustration of embodied cognition is the way that we often use conceptual metaphors about moving around in a physical landscape to represent abstract knowledge and thinking: “a field of knowledge”, “broadening your horizons”, “letting your mind wander”, “a study guide”, a “train of thought”. It’s not hard to imagine why our brains evolved to do this; for eons most of our most important knowledge was mapped to physical location: go there to get water, we saw animals to hunt over there, that area is dangerous, our enemies live in that direction. It’s interesting to note that instead of fields of knowledge we also say branches of knowledge. Is this a relic of our ancient tree-dwelling past? This is just the quickest introduction to the idea of embodied cognition (it’s not really a theory, more of a perspective or research program); I will write much more about it in the future, especially the connection between physical landscapes and mental landscapes.

Embodied cognition raises some very fundamental questions about the nature of AI and future prospects for strong AI – Is it possible to create a mind that is somehow fully disembodied? Is that desirable? Is it easier to create a disembodied mind than an embodied mind? Does having a body require that you have the ability to suffer and feel pain? Is it ethical to create that type of entity? Will we do it anyways even if it is not?

I have a feeling that there is something very prophetic about the notion of using search engine data to create an AI (hopefully this is the only part of Ex Machina that’s prophetic…). As evidenced in the simplest way possible in my experiment above, there might be a way to use search engines to learn about what it feels like to be a living, breathing being with a fragile and imperfect body. How we embed this knowledge in an AI in a meaningful way, I don’t know. I’ll leave that to my minions; I’m more of a 10,000-foot, bird’s-eye-view, big-picture type of guy (I kid, I kid… but not really).

To no one’s surprise, I found a study that feels like a first step in that direction. The authors used Google Image search to discern what it truly feels like for a human to see color: the emotions, the abstract associations, the meaning.

I don’t know which will be worse: the AI apocalypse or AI art critics with superhuman levels of snobbiness and pretension.

The study, “Color Associations in Abstract Semantic Domains,” was published in August 2020 in Cognition (which I assume is a really good journal based on the simplicity of the name – see Science and Nature). I’ll quote the article enough to give you a flavor of what they did and comment, but really you should just read it here.

The beginning sections of the introduction do a great job of concisely describing the background, motivation, significance, and novelty of the methods and I can’t really do any better so here it is:

“Color has been harnessed as a means of communication throughout human history, as evidenced by its use in painting, poetry, fashion, architecture, and marketing (Lakoff & Turner, 1989; Riley, 1995; Labrecque & Milne, 2012). Indeed, recent work shows that color can prime a range of attentional, emotional, and interpretive responses (Hill & Barton, 2005; Mehta & Zhu, 2009; Labrecque & Milne, 2012; Elliot & Maier, 2014). Color is also frequently used in linguistic metaphors to describe concepts across varying levels of abstraction, including those that lack direct visible referents (e.g., “I could play the blues and then not be blue anymore” — B.B. King) (Lakoff & Turner, 1989; Winter et al., 2018; Winter, 2019). A number of studies have found that linguistic metaphors can activate neurocognitive processes similar to those involved in forms of synaesthesia that ascribe colors to letters or numbers (Marks, 1982; O’Dowd et al., 2019). These findings are consistent with theories of multimodal cognition which argue that the metaphorical use of sensory data in everyday language indicates that sensory data plays a key role in the semantics of both concrete and abstract concepts (Tanenhaus et al., 1995; Barsalou, 2003, 2010; Gallese & Lakoff, 2005; Bergen, 2012; Dancygier & Sweetser, 2012).

However, popular theories maintain that the content and structure of concrete and abstract concepts are qualitatively different (Wiemer‐Hastings & Xu, 2005; Binder et al., 2005; Brysbaert et al., 2014). Concepts are said to be concrete if their meanings involve perceivable features and referents (e.g. the concept “dog”), whereas abstract concepts are said to lack specific referents in sensory experience and to convey meanings that depend on theoretical knowledge (e.g. the concept “democracy”). A number of theories hold that abstract concepts are defined by formal, logical relations whose structure is independent of sensory data (Newell et al., 1989; Jackendoff, 2002; Chatterjee, 2010). For example, nativists frequently argue that abstract concepts are defined a priori by an internal formal language (Fodor, 1975; Laurence & Margolis, 2002; Medin & Atran, 2004), and connectionists view abstract concepts as amodal graph-theoretic objects (Tenenbaum et al., 2011). Recent work attempts to reconcile these views in proposing that while abstract concepts may not be represented using sensory data, they may be embodied through associations with emotion (Vigliocco et al., 2009; Kousta et al., 2011; Troche et al., 2014, 2017). Thus, there is ongoing debate about whether color (and sensory data in general) contributes to semantic associations between abstract concepts.

The recent emergence of ‘multimodal’ approaches in natural language processing (NLP) – i.e. approaches that integrate both text and images – hold promise in advancing this inquiry. These developments mark a critical advance upon largely text-based approaches, such as the qualitative identification of verbal metaphors (Lakoff & Turner, 1989; Gallese & Lakoff, 2005), and the quantitative measure of co-occurrence patterns among words in text using LDA (Blei & Ng, 2003) or word2vec (Mikolov et al., 2013). New models of multimodal distributional semantics show how images can be used to infer semantic associations among words (Bruni et al., 2014); however, these methods exploit a large number of (often uninterpretable) features to associate words with images (Deng et al., 2010; Lindner et al., 2012; Bruni et al., 2014). Moreover, these approaches are not explicitly designed to encode information that is relevant for human semantic processing. For example, they often operate in RGB colorspace, which does not accurately represent human color perception (International Commission on Illumination 1978; Safdar et al., 2017).

Here, we develop a computational method for quantifying statistical relationships between words and their color distributions, which we measure using a state-of-the-art transformation of colorspace that accurately captures human color perception (referred to as a perceptually uniform colorspace) (Safdar et al., 2017). We infer hierarchical clustering among words according to the perceptually uniform color distributions of their Google Image search results. By examining patterns of image production and search mediated by human users, and by representing color in a perceptually grounded fashion, our analyses are interpretable with respect to cognition (Lupyan & Goldstone, 2019).”

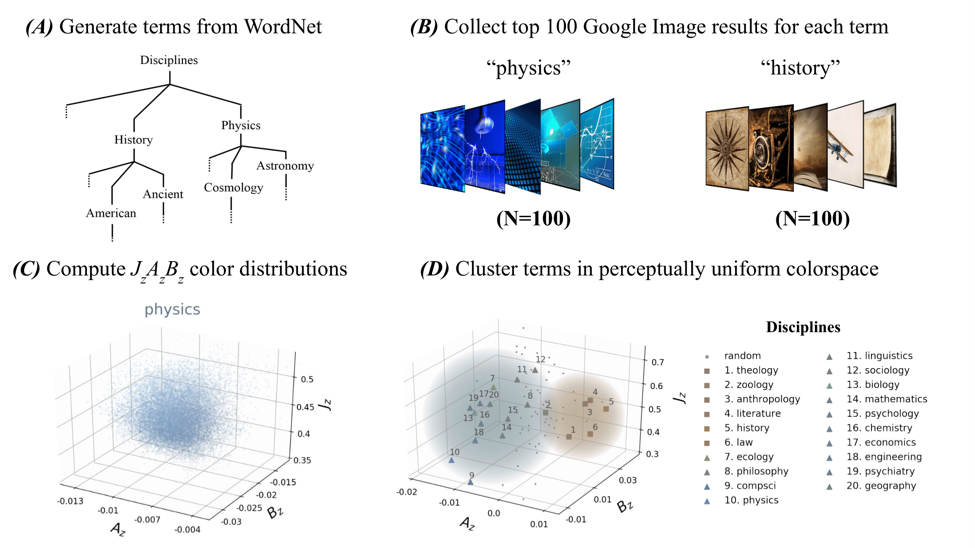

They concisely explain the methods in this figure and caption.

Fig. 1. Visualization of our analysis pipeline. (A) For each domain (e.g., disciplines), we generate search terms from the lexical database WordNet. (B) For each term within a domain (e.g., “physics”), we collect the top 100 Google Image search results. (C) Next, we transform each sRGB color distribution into the perceptually uniform JzAzBz colorspace. (D) We then average the color distributions over images for each search term to obtain aggregate perceptually uniform color distributions, and we compute the Jensen-Shannon divergence between the color distributions for pairs of terms to compare and cluster words in perceptually uniform colorspace. In panel (D), we visualize the domain of academic disciplines using the mean JzAzBz coordinates among Google Image search results for each word. Points are colored according to their mean JzAzBz coordinate. Spheres indicate clusters returned by our hierarchical analysis. Small markers indicate a sample of random WordNet nouns.
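To make step (D) a little more concrete, here is a minimal Python sketch of the two operations the caption names: averaging per-image color distributions into one aggregate distribution per search term, and comparing two terms with the Jensen-Shannon divergence. Everything specific in it is my own assumption for illustration: the four coarse color bins, the made-up pixel counts, the function names, and the choice of log base 2. It skips the JzAzBz conversion entirely and it is not the comp-syn API or the authors' actual code.

```python
import math


def average_distributions(histograms):
    """Average per-image color histograms into one aggregate distribution.

    Each histogram is normalized first, so every image carries equal weight.
    """
    n_bins = len(histograms[0])
    totals = [0.0] * n_bins
    for hist in histograms:
        total_pixels = sum(hist)
        for i, count in enumerate(hist):
            totals[i] += count / total_pixels
    return [t / len(histograms) for t in totals]


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; bins where p is zero add nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric, and bounded by 1 with log base 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical pixel counts per image over four coarse bins:
# [reddish, yellowish, greenish, bluish]. These numbers are invented.
toy_images = {
    "love": [[70, 20, 5, 5], [60, 25, 10, 5]],
    "anger": [[80, 10, 5, 5], [65, 20, 5, 10]],
    "physics": [[5, 10, 15, 70], [10, 5, 20, 65]],
}

aggregate = {term: average_distributions(h) for term, h in toy_images.items()}

d_love_anger = js_divergence(aggregate["love"], aggregate["anger"])
d_love_physics = js_divergence(aggregate["love"], aggregate["physics"])

print("love vs anger:  ", round(d_love_anger, 4))
print("love vs physics:", round(d_love_physics, 4))
```

With these invented numbers the two reddish terms land much closer to each other than either does to the bluish one, which is the kind of pairwise distance a hierarchical clustering step would then group on.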

If you get the gist then feel free to skip these next sections, but here’s a little more from the methods section.

“For each domain (e.g. disciplines), we extract search terms from WordNet. Then, we collect the top 100 Google Image search results for each term in each domain (e.g. “physics”, “jazz”, “love”). We compute the aggregate perceptually uniform color distributions for each term by averaging over its images, and we compare the color distributions of terms in each abstract domain. We cluster terms in each domain using the Jensen–Shannon (JS) divergence between their color distributions, and we compare JS divergence to measures of semantic similarity. We now describe each step in detail (Fig. 1). Our analyses can be reproduced and extended using the Python package comp-syn (“Computational-Synesthesia”).”

From section 2.2, “Semantic Domain and Search Term Construction”:

We identify search terms for each domain by retrieving the hyponyms from WordNet corresponding to disciplines, emotions, and music genres (e.g. “classical” for music genres). We associate each term with an abstractness score based on its hierarchical position in WordNet (2.5. Domain Analysis). We compare results from these abstract taxonomies to a concrete domain by examining a sample of four subdomains of the animal hierarchy in WordNet – i.e., dogs, cats, horses, and marsupials. The domain of animals was selected for this analysis because, prima facie, it provides a representative example of a widely familiar concrete domain. These abstract domains were chosen because of their treatment in recent studies of concept concreteness (Brysbaert et al., 2014; Troche et al., 2017).

The discussion is short, which I greatly appreciate, and you get why it is so short when you understand the results. The best science is usually so evident and elegant that it doesn’t need much exposition. Here are the key passages, in the authors’ words:

In this study, we find not only that abstract concepts are associated with colors in online images, but also that these color associations can encode relationships that have been traditionally viewed as symbolic and amodal in nature, such as hierarchical structure and semantic similarity. These results provide novel evidence for the embodied view of cognition by suggesting that even abstract properties of semantic architecture, such as set-theoretic relations in lexical hierarchies, can participate in a multimodal interface with sensory information. As such, these findings reveal a new way to synthesize extant accounts of embodied cognition by suggesting that color can encode both metaphorical mappings (Gallese & Lakoff, 2005) and affective relations (Kousta et al., 2011; Troche et al., 2014, 2017) simultaneously. Here we discuss the ability for our method to identify aesthetic coherence in language, which we define as the use of sensory data to encode both logical and affective dimensions of semantic domains.

Patterns of aesthetic coherence are evident in the t-SNE visualization of abstract concepts according to their color distributions, displayed in fig. 4B. For example, in the domain of disciplines, emotions associated with reddish hues – “Love”, “Anger”, “Affection”, and “Passion” – cluster with qualitative disciplines, including “Literature”, “Anthropology”, “History”, and “Law.” By contrast, quantitative disciplines associated with bluish hues do not cluster with any of the emotion terms examined, consistent with empirical research indicating that bluish hues prime competence and rationality, rather than being highly emotive (Alberts & Geest, 2011; Labrecque & Milne, 2012; Mehta & Zhu, 2009). These results indicate that color can partition subclasses within abstract domains (e.g. qualitative and quantitative disciplines), while also reflecting affective contrasts among subclasses. Thus, while color associations among concrete concepts are likely mediated by intrinsic properties of objects, we find that color associations among abstract concepts can be mediated by non-arbitrary affective dimensions.

We pose a similar question regarding music: are color associations among music genres correlated with color associations among the emotions evoked by these genres? Excitingly, we find that genres which conventionally evoke aggressive and ‘dark’ themes (e.g. “Metal” and “Punk Rock”) cluster near the emotion “Fear” (fig. 4B). Meanwhile, genres which conventionally evoke romantic themes (e.g. “Latin”) cluster near the emotion “Desire” (Zentner et al., 2008).

Investigating the extent to which representations encoded by online search engines reflect representations at the individual level is a promising area for future study. Future experiments on the priming effects of abstract color associations are well-positioned to inform several unsolved puzzles (Mohammad, 2011), such as the finding that abstract concepts are cognitively retrieved more quickly than concrete concepts (Kousta et al., 2011; Vigliocco et al., 2009). Given that emotional valence has been proposed as an explanation of this finding (Kousta et al., 2011), we posit that aesthetic coherence may provide an important piece of the theoretical framework needed to understand such results more broadly.

Cultural biases may also contribute to the color associations we report. Notably, color associations among academic disciplines are correlated with cultural biases in gender representation and expression among the disciplines examined (Frassanito & Pettorini, 2008). Future work that applies our methods across cultures will provide insights into the potential universality of aesthetic coherence (Malt, 1995; Chloe et al., 2013; Youn et al., 2016).

I don’t have enough expertise to evaluate the methods and results in a truly critical manner, but this is clearly a fascinating approach to understanding fundamental aspects of embodied cognition in humans. More studies like this might already exist for all I know, and I wouldn’t be surprised if research of this nature yields really deep insights about human psychology in the future, insights which may or may not be useful for the development of artificial intelligence.